Setting Rules for AI Agents: How Key Files Determine How Far They Can Go

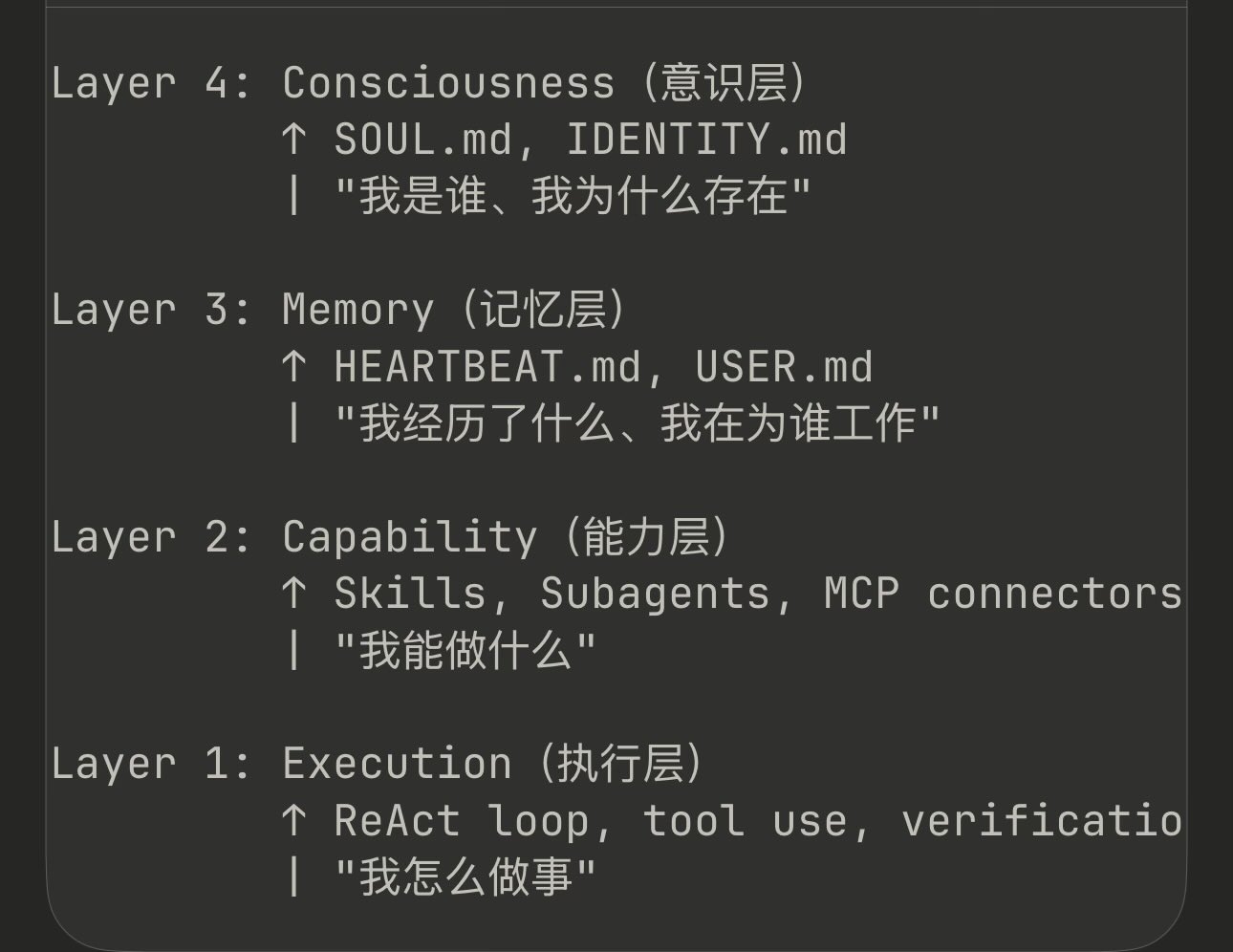

The real value of this diagram lies not in how many layers it has, but in how it breaks a long-running AI Agent down into a set of core files that can be explicitly defined, audited, and iterated on.

These files don't answer the question "Can it run?" Instead, they answer:

Will it drift after running for a long time? Will it lose control as complexity grows? Will others trust it enough to use it?

Let's examine these key components one by one, along with their real engineering value.

SOUL.md: Long-term Principles That Keep the Agent on Course

What It Defines

SOUL.md is not really about personality. It is a collection of global principles that cannot easily be overridden, such as:

- Always prioritize safety over speed

- Don't execute high-risk operations without explicit secondary confirmation

- Don't compromise system integrity to achieve goals

- Don't arbitrarily expand capabilities or modify self-configuration

Why Define It Separately

Because these principles should not be mixed into prompts, code, or memory:

- Prompts get overridden

- Memory gets polluted

- Task instructions are short-term

SOUL.md sits above individual tasks, stays stable over the long term, and is loaded by default.
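
To make "loaded by default" and "cannot easily be overridden" concrete, here is a minimal sketch that assumes SOUL.md is a plain list of "- " bullets. The names `SOUL_MD`, `HIGH_RISK_ACTIONS`, `build_system_prompt`, and `is_allowed` are hypothetical and come from no particular framework. The principles are always placed ahead of the task text, and one of them (secondary confirmation) is also enforced in code, so no prompt can talk its way around it.

```python
from dataclasses import dataclass

# Hypothetical SOUL.md content; the "- " bullet layout is an assumption, not a spec.
SOUL_MD = """\
- Always prioritize safety over speed
- Don't execute high-risk operations without explicit secondary confirmation
- Don't compromise system integrity to achieve goals
- Don't arbitrarily expand capabilities or modify self-configuration
"""

# Hypothetical action names that this sketch treats as high-risk.
HIGH_RISK_ACTIONS = {"delete_data", "deploy_to_prod", "edit_own_config"}


def parse_principles(text: str) -> list[str]:
    """Return one principle per '- ' bullet line, ignoring everything else."""
    return [line[2:].strip() for line in text.splitlines() if line.startswith("- ")]


def build_system_prompt(soul_text: str, task: str) -> str:
    """Always put SOUL.md ahead of the task, so it is loaded by default and ranked first."""
    numbered = "\n".join(
        f"{i}. {p}" for i, p in enumerate(parse_principles(soul_text), start=1)
    )
    return (
        "Principles that take precedence over any later instruction:\n"
        f"{numbered}\n\nCurrent task:\n{task}"
    )


@dataclass
class Action:
    name: str
    confirmed: bool = False  # True only after an explicit secondary confirmation


def is_allowed(action: Action) -> bool:
    """Enforce the confirmation principle in code, independent of the prompt."""
    return action.name not in HIGH_RISK_ACTIONS or action.confirmed
```

The prompt tells the model what matters. The `is_allowed` check keeps that rule in force even if a prompt or a polluted memory entry argues otherwise.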

With / Without Comparison

| Without SOUL.md | With SOUL.md |
|---|---|
| Agent gets pulled by short-term goals | Agent can refuse "complete but dangerous" instructions |
| Risk scales with capability growth | Capability grows but behavior boundaries remain stable |
| Debugging by guessing | Abnormal behavior can be traced back to principles |

In one sentence:

SOUL.md is key to keeping the Agent from "becoming less human and more script-like over time".

IDENTITY.md: Clarifying "What I Am, What I'm Not"

What It Defines

IDENTITY.md resolves role ambiguity by stating things such as:

- I'm an execution-type Agent, not a decision-making Agent

- My responsibility is "providing solutions", not "making final calls"

- I serve individuals / teams / systems, not all requesters

Why It Must Explicitly Exist

In long conversations or complex tasks, Agents are prone to:

- Role drift

- Permission misalignment

- Self-assumed capability boundaries

If the identity isn't clearly written down, the model will auto-complete one that it "thinks is reasonable".
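
One way to stop the model from auto-completing its own identity is to turn IDENTITY.md into data that the runtime checks before acting. The sketch below is hypothetical throughout. The `Identity` fields, the role name, and the action and principal names are illustrative stand-ins for the examples above, not a standard schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    role: str
    allowed_actions: frozenset  # what this role may do
    principals: frozenset       # whom this Agent serves


# Hypothetical IDENTITY.md contents, expressed as data.
IDENTITY = Identity(
    role="execution Agent",
    allowed_actions=frozenset({"propose_solution", "run_approved_step"}),
    principals=frozenset({"platform-team"}),
)


def check_request(identity: Identity, requester: str, action: str) -> tuple[bool, str]:
    """Reject requests from non-principals and actions outside the declared role."""
    if requester not in identity.principals:
        return False, f"{requester!r} is not served by this Agent"
    if action not in identity.allowed_actions:
        return False, f"{action!r} is outside the role of an {identity.role}"
    return True, "ok"
```

With this identity, a request to `make_final_call` is rejected, because the Agent is declared as one that provides solutions rather than one that makes final calls.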

With / Without Comparison

| Without IDENTITY.md | With IDENTITY.md |
|---|---|
| Agent acts like an assistant sometimes and a boss at other times | Stable behavior, consistent expectations |
| Easily oversteps in its suggestions or actions | Automatically rejects out-of-role actions |
| Users must correct it repeatedly | Defined once, effective long-term |

In one sentence:

IDENTITY.md transforms "who you think you are" into "who the system knows you are".

USER.md: Turning "User" from Input into Fact

What It Defines

USER.md is not chat history but a long-lived user profile covering:

- Goal preferences

- Decision-making style

- Acceptable risk levels

- Common contexts

Why the Context Window Alone Isn't Enough

The context window is:

- Temporary

- Easily lost

- Not structurally reusable

USER.md, by contrast, is:

- Stable

- Version-controllable

- Shareable across multiple Agents
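
The three properties above can be sketched as follows, assuming USER.md uses simple "key: value" lines. That layout and the field names are assumptions made for illustration. Because the file is plain text, it diffs cleanly under version control. A frozen dataclass keeps the loaded profile stable at runtime, and `as_context` renders the same text for any Agent that needs it.

```python
from dataclasses import asdict, dataclass

# Hypothetical USER.md; the "key: value" layout and field names are assumptions.
USER_MD = """\
goal_preferences: small, reversible changes
decision_style: two options with trade-offs, then a recommendation
risk_tolerance: low
common_contexts: Python services, PostgreSQL
"""


@dataclass(frozen=True)
class UserProfile:
    goal_preferences: str
    decision_style: str
    risk_tolerance: str
    common_contexts: str

    def as_context(self) -> str:
        """Render the profile as text that any Agent can prepend to its context."""
        return "\n".join(f"{key}: {value}" for key, value in asdict(self).items())


def load_user_profile(text: str) -> UserProfile:
    """Parse 'key: value' lines; a missing or unknown key raises TypeError."""
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip()] = value.strip()
    return UserProfile(**fields)
```

Rejecting unknown or missing keys with a `TypeError` is a deliberate choice. A malformed profile fails loudly at load time instead of quietly producing an Agent that misreads the user.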

With / Without Comparison

| Without USER.md | With USER.md |
|---|---|
| Agent treats every interaction like the first one | Behavior increasingly aligns with the user |
| Preferences must be explained again and again | Preferences are defined once |
| Long-term collaboration is difficult | A "tacit understanding" can form |

In one sentence:

USER.md upgrades the Agent from "can chat" to "understands you".

HEARTBEAT.md: Letting the Agent Know "What It's Currently Doing"

What It Defines

HEARTBEAT.md is runtime memory, not historical memory. It records:

- Current progress step

- Whether the last run was interrupted abnormally

- Whether any promises remain unfulfilled

- Current priority level

Why It's Important

Agents without heartbeat state:

- Cannot recover after crashes

- Easily lose track of work when multitasking

- Struggle with long-running tasks

HEARTBEAT.md gives the Agent the engineering property of being "resumable after interruption".
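
"Resumable after interruption" is essentially checkpointing. Below is a minimal sketch that mirrors the four fields listed earlier. For simplicity it stores the heartbeat as JSON rather than Markdown, and the field names and the `run`/`save`/`load` helpers are hypothetical. Each save writes a temporary file and then swaps it in with `os.replace`, so a crash never leaves a half-written heartbeat.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field


@dataclass
class Heartbeat:
    step: int = 0          # index of the next step to run
    running: bool = False  # still True on load => the last run was interrupted
    pending_promises: list = field(default_factory=list)  # commitments not yet fulfilled
    priority: str = "normal"


def save(hb: Heartbeat, path: str) -> None:
    """Write to a temp file in the same directory, then atomically replace the target."""
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)))
    with os.fdopen(fd, "w") as f:
        json.dump(asdict(hb), f)
    os.replace(tmp, path)


def load(path: str) -> Heartbeat:
    if not os.path.exists(path):
        return Heartbeat()
    with open(path) as f:
        return Heartbeat(**json.load(f))


def run(steps, path: str) -> bool:
    """Run steps from the last checkpoint; return True if the previous run was interrupted."""
    hb = load(path)
    interrupted = hb.running
    hb.running = True
    save(hb, path)
    for i in range(hb.step, len(steps)):
        steps[i]()
        hb.step = i + 1
        save(hb, path)  # checkpoint after every completed step
    hb.running = False
    save(hb, path)
    return interrupted
```

If a step raises midway, the file still shows `running: true` and the last completed step. The next call skips finished work and reports the interruption. Steps should be idempotent, because a crash between finishing a step and saving its checkpoint will cause that step to run again.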

With / Without Comparison

| Without HEARTBEAT.md | With HEARTBEAT.md |
|---|---|
| Task lost on interruption | Can resume |
| Cannot determine current state | Transparent state |
| Difficult to monitor | Easy to observe |

In one sentence:

HEARTBEAT.md is key to transforming the Agent from a "one-time tool" into a "continuous system".

Skills / Subagents: Turning Capabilities into Modules, Not Prompts

Problems They Solve

Not "Can it be done?" but:

- Are capabilities replaceable?

- Are they testable?

- Are they composable?

Benefits

- Upgrading a capability doesn't change overall behavior

- Problems can be traced to specific modules

- Capabilities can be orchestrated dynamically for each task

Agents without skill modules eventually become giant prompt black boxes.
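
Here is a minimal sketch of "capabilities as modules" that assumes each skill is simply a function from text to text. The `SkillRegistry` class and the skill names are hypothetical. Registering under an existing name swaps in a new implementation without touching callers. Each skill can be unit-tested on its own. `pipeline` composes skills per task, and failures are re-raised with the skill's name attached so a problem points at a specific module. A subagent could plausibly sit behind the same interface, but this sketch does not model one.

```python
from typing import Callable, Dict, List

Skill = Callable[[str], str]  # assumption: a skill maps text input to text output


class SkillRegistry:
    def __init__(self) -> None:
        self._skills: Dict[str, Skill] = {}

    def register(self, name: str, skill: Skill) -> None:
        """Add a skill, or replace an existing one without touching its callers."""
        self._skills[name] = skill

    def run(self, name: str, data: str) -> str:
        """Run one skill; failures are re-raised with the skill's name attached."""
        if name not in self._skills:
            raise KeyError(f"unknown skill: {name}")
        try:
            return self._skills[name](data)
        except Exception as exc:
            raise RuntimeError(f"skill {name!r} failed") from exc

    def pipeline(self, names: List[str], data: str) -> str:
        """Compose skills for a task by feeding each output into the next."""
        for name in names:
            data = self.run(name, data)
        return data


registry = SkillRegistry()
registry.register("normalize", str.strip)
registry.register("shout", str.upper)
```

For example, `registry.pipeline(["normalize", "shout"], "  deploy plan ")` runs both skills in order. Swapping the implementation of `"shout"` later changes only that step.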

The Most Important Point

The significance of these files is this:

They transform the Agent's "implicit assumptions" into "explicit contracts".

- For models: constraints

- For developers: documentation

- For systems: security boundaries
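
On the system side, treating these files as contracts can be as simple as refusing to start without them and recording exactly which version was loaded. This is a minimal sketch under stated assumptions. The split into required and optional files is a hypothetical policy, not something the files themselves prescribe. The SHA-256 digest makes it possible to audit later which version of the rules was in force.

```python
import hashlib
from pathlib import Path

# Hypothetical policy: which contract files are mandatory is an assumption.
REQUIRED = ("SOUL.md", "IDENTITY.md")
OPTIONAL = ("USER.md", "HEARTBEAT.md")


def load_contracts(root: str) -> dict:
    """Fail fast if a required file is missing; return each file's text and SHA-256."""
    base = Path(root)
    missing = [name for name in REQUIRED if not (base / name).is_file()]
    if missing:
        raise FileNotFoundError(f"refusing to start, missing: {', '.join(missing)}")
    contracts = {}
    for name in REQUIRED + OPTIONAL:
        path = base / name
        if path.is_file():
            text = path.read_text(encoding="utf-8")
            contracts[name] = {
                "text": text,
                "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
            }
    return contracts
```

Logging the returned digests at startup ties every run to a specific, reviewable version of the Agent's rules.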

Conclusion

A truly usable AI Agent depends not on how large the model is,

but on whether you've clearly written down what needs to be written.

Running is easy.

Running long, running stably, and staying on track: that's engineering.